HARC, the Highly-Available Resource Co-allocator, is a system for creating and managing resource reservations. Multiple reservations are made in a single, atomic step. The most common use of HARC is to make advance reservations on multiple supercomputers, for use in a single MPICH-G2 or MPIg metacomputing job. However, HARC is not limited to compute resources (or to making reservations that have the same start time and duration).

This page gives an overview of HARC and some pointers to further information. For details, and for up-to-date information on HARC, please visit the HARC Wiki at http://wiki.cct.lsu.edu/harc/HARC.

The implementation of HARC was supported in part by the National Science Foundation "EnLIGHTened Computing" project, NSF Award #0509465; and also by the SURA SCOOP Program (ONR N00014-04-1-0721, NOAA NA04NOS4730254).

What is Co-allocation?

Co-allocation (also called Co-scheduling) is the booking of multiple resources in a single (atomic) step, as if they were a single resource. This bundling is important for those classes of applications that need multiple, distributed resources. The most common application classes that benefit from this facility are:

- Meta-computing, where a single parallel application (e.g. an MPI code that scales to thousands of processes) is run across multiple supercomputers, treating them like a single machine; and

- Computational Workflow, where a set of computationally intensive tasks must all be executed while respecting dependencies between certain tasks.

In both these scenarios, it is desirable to schedule all of the required resources in one step, whether or not the start-times and durations of the individual reservations are the same. (The Meta-computing scenario is really just a special case of the more general Workflow scenario.)
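To make the difference between the two scenarios concrete, here is a minimal Java sketch (Java 16+, for records) that checks whether a proposed set of reservations respects the dependencies between workflow tasks. The `Slot` and `Dependency` types, the `WorkflowCheck` class, and the use of minutes after midnight for times are all hypothetical illustrations, not part of HARC. A metacomputing job is simply the special case with identical slots and no dependencies.

```java
import java.util.List;
import java.util.Map;

// Hypothetical model (not HARC's): a reservation on one resource,
// with times in minutes after midnight.
record Slot(String resource, int startMin, int durationMin) {
    int endMin() {
        return startMin + durationMin;
    }
}

// Hypothetical model: task `before` must finish before task `after` starts.
record Dependency(String before, String after) {}

final class WorkflowCheck {
    // True if every dependency is satisfied by the proposed slots
    // (keyed by task name); an unknown task name counts as a violation.
    static boolean respectsDependencies(Map<String, Slot> slots, List<Dependency> deps) {
        for (Dependency d : deps) {
            Slot first = slots.get(d.before());
            Slot second = slots.get(d.after());
            if (first == null || second == null) {
                return false;
            }
            if (first.endMin() > second.startMin()) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // Metacomputing: two machines, same start (12:00) and duration, no dependencies.
        Map<String, Slot> meta = Map.of(
                "partA", new Slot("bluedawg.loni.org", 720, 60),
                "partB", new Slot("ducky.loni.org", 720, 60));
        System.out.println(respectsDependencies(meta, List.of()));

        // Workflow: a simulation from 12:00 to 13:00, then a 30-minute analysis at 13:00.
        Map<String, Slot> workflow = Map.of(
                "simulate", new Slot("bluedawg.loni.org", 720, 60),
                "analyze", new Slot("ducky.loni.org", 780, 30));
        System.out.println(respectsDependencies(workflow,
                List.of(new Dependency("simulate", "analyze"))));
    }
}
```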

It's worth noting that the terms co-allocator and co-scheduler are sometimes used to refer to systems that only deal with the Meta-computing scenario; HARC is designed to handle both scenarios.

The term co-reservation is also sometimes used, and appears to be synonymous with co-allocation.

How does HARC work?

HARC works as follows.


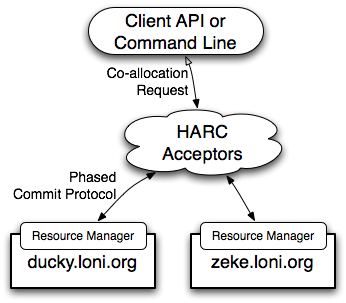

- The client makes a request via the Client API, either from the command line or from another tool.

- The request goes to the HARC Acceptors, which manage the co-allocation process.

- The Acceptors talk to individual Resource Managers which make the individual resource reservations (by talking to the local schedulers).

- The user discovers the outcome of the co-allocation by polling the Acceptors.
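The outcome the user eventually reads back from the Acceptors is all-or-nothing: either every reservation is made, or none is. The following minimal Java sketch shows only that decision rule, assuming each Resource Manager reports a single "prepared" or "aborted" vote. It deliberately leaves out how the Acceptors agree on the votes, and the `CoallocationOutcome` class and its enums are hypothetical.

```java
import java.util.List;
import java.util.Map;

final class CoallocationOutcome {
    // Hypothetical vote an RM sends after trying its local reservation.
    enum Vote { PREPARED, ABORTED }

    // Hypothetical result a polling client might see.
    enum Outcome { COMMITTED, ABORTED, PENDING }

    // expected: every RM taking part in the co-allocation.
    // votes: votes received so far; RMs that have not voted are absent.
    static Outcome decide(List<String> expected, Map<String, Vote> votes) {
        boolean allPrepared = true;
        for (String rm : expected) {
            Vote v = votes.get(rm);
            if (v == Vote.ABORTED) {
                return Outcome.ABORTED; // one refusal aborts the whole request
            }
            if (v == null) {
                allPrepared = false; // still waiting for this RM
            }
        }
        return allPrepared ? Outcome.COMMITTED : Outcome.PENDING;
    }

    public static void main(String[] args) {
        List<String> rms = List.of("bluedawg.loni.org", "ducky.loni.org");
        System.out.println(decide(rms, Map.of("bluedawg.loni.org", Vote.PREPARED)));
        System.out.println(decide(rms, Map.of(
                "bluedawg.loni.org", Vote.PREPARED, "ducky.loni.org", Vote.ABORTED)));
        System.out.println(decide(rms, Map.of(
                "bluedawg.loni.org", Vote.PREPARED, "ducky.loni.org", Vote.PREPARED)));
    }
}
```

A client polling the Acceptors would keep seeing `PENDING` until the last vote arrives or any RM refuses.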

The goal of the HARC architecture was to provide a co-allocation service without creating a single point of failure. HARC is based upon Lamport and Gray's Paxos Commit protocol, which is where the term Acceptor originates. The Acceptors are a distributed group of services which act in a coordinated fashion. The overall system works normally provided that a majority of the Acceptors remain in a working state; a deployment of 7 Acceptors, for example, keeps working if any 3 of them fail. This would allow such a deployment to have a Mean-Time-To-Failure of a couple of years.
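The availability argument rests on simple majority arithmetic. This small Java sketch (the `AcceptorQuorum` class is hypothetical) computes, for a deployment of n Acceptors, the size of a majority and how many Acceptors may fail while a majority is still available. For 7 Acceptors, a majority is 4, so any 3 may fail.

```java
final class AcceptorQuorum {
    // Smallest number of Acceptors that forms a majority of n.
    static int majority(int n) {
        return n / 2 + 1;
    }

    // How many Acceptors can fail while a majority is still available.
    static int toleratedFailures(int n) {
        return n - majority(n);
    }

    // True if `working` Acceptors out of n are enough to keep going.
    static boolean operational(int n, int working) {
        return working >= majority(n);
    }

    public static void main(String[] args) {
        for (int n : new int[] {3, 5, 7}) {
            System.out.printf("%d Acceptors: majority %d, tolerates %d failures%n",
                    n, majority(n), toleratedFailures(n));
        }
    }
}
```

One consequence of the majority rule is that an even number of Acceptors buys nothing extra: 8 Acceptors need a majority of 5 and still tolerate only 3 failures, the same as 7.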

The Java Client API provides a simple interface through which applications can make resource requests. (Status enquiries, canceling, etc. are also provided.) A simple command-line interface is built on top of the API, giving easy access to HARC's functionality. The following example command would try to reserve 8 cores on each of the LONI AIX machines bluedawg and ducky, starting at 12 noon, for one hour.

```shell
harc-reserve -c bluedawg.loni.org/8 \
    -c ducky.loni.org/8 -s 12:00 -d 1:00
```
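To show how the arguments in that command map onto a request, here is a minimal Java sketch of parsing them. It assumes, from this example alone, that each `-c` value has the form `host/cores`, that `-s` is a start time written as HH:MM, and that `-d` is a duration in hours:minutes. The `ReserveArgs` class and its methods are hypothetical; they are not the real `harc-reserve` implementation or the HARC Client API.

```java
import java.time.Duration;
import java.time.LocalTime;

final class ReserveArgs {
    // Hypothetical request for `cores` cores on one machine.
    record ComputeRequest(String host, int cores) {}

    // Splits a -c value such as "bluedawg.loni.org/8" at its last '/'.
    static ComputeRequest parseCompute(String spec) {
        int slash = spec.lastIndexOf('/');
        if (slash <= 0 || slash == spec.length() - 1) {
            throw new IllegalArgumentException("expected host/cores, got: " + spec);
        }
        String host = spec.substring(0, slash);
        int cores = Integer.parseInt(spec.substring(slash + 1));
        return new ComputeRequest(host, cores);
    }

    // Parses a -s value such as "12:00" (two-digit hours and minutes).
    static LocalTime parseStart(String hhmm) {
        return LocalTime.parse(hhmm);
    }

    // Parses a -d value such as "1:00" as hours:minutes.
    static Duration parseDuration(String hm) {
        String[] parts = hm.split(":");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected hours:minutes, got: " + hm);
        }
        return Duration.ofHours(Integer.parseInt(parts[0]))
                .plusMinutes(Integer.parseInt(parts[1]));
    }

    public static void main(String[] args) {
        System.out.println(parseCompute("bluedawg.loni.org/8"));
        System.out.println(parseCompute("ducky.loni.org/8"));
        System.out.println(parseStart("12:00"));
        System.out.println(parseDuration("1:00").toMinutes() + " minutes");
    }
}
```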

What can I reserve with HARC?

HARC is extensible at every layer, from the Resource Managers right up to the command-line interface. To date, three Resource Managers (RMs) have been implemented, around a common core of code:

- A Compute RM which can make reservations on a supercomputer, interfacing with the batch scheduler system. This requires the scheduler to be configured to allow reservations. LoadLeveler, PBSPro, LSF, Torque/Maui and Torque/Moab are supported. SDSC's Catalina scheduler is partly supported.

- A Simple Network RM which can reserve dedicated lightpaths on a GMPLS-based optical network, where the topology contains no loops. The module currently supports Calient Diamondwave PXCs.

- The G-lambda HGLW (HARC G-Lambda Wrapper) which allows HARC to control devices on the G-lambda Project's testbed, by interfacing to the G-lambda middleware. This prototype RM was implemented by AIST. The source is not available.
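The "no loops" restriction on the Simple Network RM matters because, in a loop-free (tree-shaped) topology, there is at most one route between any two endpoints, so no choice of path has to be made. The following minimal Java sketch finds that unique route with a depth-first search. The `LoopFreePath` class and the node names are hypothetical; the sketch does not model GMPLS signalling or the Calient hardware.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;

final class LoopFreePath {
    private final Map<String, List<String>> links = new HashMap<>();

    // Records a bidirectional link between two nodes.
    void addLink(String a, String b) {
        links.computeIfAbsent(a, k -> new ArrayList<>()).add(b);
        links.computeIfAbsent(b, k -> new ArrayList<>()).add(a);
    }

    // Returns the route from src to dst, or an empty list if they are not
    // connected. In a loop-free topology this route is the only one.
    List<String> route(String src, String dst) {
        Map<String, String> parent = new HashMap<>();
        Deque<String> stack = new ArrayDeque<>();
        parent.put(src, null);
        stack.push(src);
        while (!stack.isEmpty()) {
            String node = stack.pop();
            if (node.equals(dst)) {
                // Walk the parent pointers back to src to rebuild the path.
                LinkedList<String> path = new LinkedList<>();
                for (String n = dst; n != null; n = parent.get(n)) {
                    path.addFirst(n);
                }
                return path;
            }
            for (String next : links.getOrDefault(node, List.of())) {
                if (!parent.containsKey(next)) {
                    parent.put(next, node);
                    stack.push(next);
                }
            }
        }
        return List.of();
    }

    public static void main(String[] args) {
        LoopFreePath net = new LoopFreePath();
        net.addLink("siteA", "pxc1");
        net.addLink("pxc1", "pxc2");
        net.addLink("pxc2", "siteB");
        net.addLink("pxc1", "siteC");
        System.out.println(net.route("siteA", "siteB"));
    }
}
```

On a topology with loops this search would still return a path, but not necessarily a unique or shortest one, which is exactly the case the Simple Network RM rules out.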

This more complex example reserves time on supercomputers at LSU and MCNC, plus a dedicated lightpath connecting the resources across the EnLIGHTened Computing testbed.

```shell
harc-reserve -c santaka.cct.lsu.edu/8 \
    -c kite1.enlightenedcomputing.org/8 \
    -n EnLIGHTened/BTR-RA1 -s 12:00 -d 1:00
```

We hope that other groups will develop Resource Managers for new types of resource; this can be done without modifying the rest of the codebase. Unfortunately, there is currently very little documentation on how to do this. If you are interested in writing an RM, please Contact Us: knowing that someone wants to write one will help motivate us to document this part of the system.
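As a rough illustration only, here is the general shape a pluggable RM might take: a prepare/commit/abort contract of the kind used by commit protocols such as Paxos Commit, plus a toy implementation for a single exclusive resource. The `ResourceManager` interface, the `ExclusiveResourceManager` class, and all method names are invented for this sketch and are not HARC's actual RM API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical plug-in contract (not HARC's real RM API).
interface ResourceManager {
    // Tentatively hold the resource; return false to vote "abort".
    boolean prepare(String txId, long startMin, long durationMin);

    // Make a prepared hold permanent.
    void commit(String txId);

    // Release a hold, whether or not it was committed.
    void abort(String txId);
}

// Toy RM for one exclusive resource: no two holds may overlap in time.
final class ExclusiveResourceManager implements ResourceManager {
    private record Hold(long start, long end, boolean committed) {}

    private final Map<String, Hold> holds = new HashMap<>();

    @Override
    public boolean prepare(String txId, long startMin, long durationMin) {
        long end = startMin + durationMin;
        for (Hold h : holds.values()) {
            if (startMin < h.end() && h.start() < end) {
                return false; // overlaps an existing hold
            }
        }
        holds.put(txId, new Hold(startMin, end, false));
        return true;
    }

    @Override
    public void commit(String txId) {
        Hold h = holds.get(txId);
        if (h != null) {
            holds.put(txId, new Hold(h.start(), h.end(), true));
        }
    }

    @Override
    public void abort(String txId) {
        holds.remove(txId);
    }

    boolean isCommitted(String txId) {
        Hold h = holds.get(txId);
        return h != null && h.committed();
    }

    public static void main(String[] args) {
        ExclusiveResourceManager rm = new ExclusiveResourceManager();
        System.out.println(rm.prepare("job1", 720, 60)); // 12:00 to 13:00
        System.out.println(rm.prepare("job2", 750, 60)); // overlaps job1
        rm.abort("job1");
        System.out.println(rm.prepare("job2", 750, 60));
        rm.commit("job2");
        System.out.println(rm.isCommitted("job2"));
    }
}
```

Keeping the contract this small is what would let a new resource type plug in without touching the rest of the system; a real RM would also talk to the local scheduler or network controller inside `prepare` and `commit`.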

Papers

- J. MacLaren, "HARC: The Highly-Available Resource Co-allocator", in Proceedings of GADA'07, LNCS 4804 (OTM Conferences 2007, Part II), Springer-Verlag, 2007, pp. 1385-1402. Available online.

- J. MacLaren, "Co-allocation of Compute and Network resources using HARC", in Proceedings of "Lighting the Blue Touchpaper for UK e-Science: closing conference of ESLEA Project", PoS(ESLEA)016, 2007. Available online.

Participants

- Archit Kulshrestha (CCT)

- Dr Jon MacLaren (CCT)

- Daniel S. Katz (CCT)

- Andrei Hutanu (CCT)

Projects Using HARC

- The EnLIGHTened Computing Project.

- GENIUS (Grid Enabled Neurosurgical Imaging Using Simulation).

Deployments of HARC

HARC is currently deployed on subsets of LONI, TeraGrid, UK NGS and NorthWest Grid. For details, please see the Deployments page on the HARC Wiki.

News

- Sep 2007: HARC was used in part of the GDOM demo at GLIF 2007.

- Aug 2007: HARC was used in the GENIUS Project demos at AHM'07.

- Nov 2006: The demo from GLIF 2006 was repeated at SC'06.

- Sep 2006: HARC was used in the largest co-allocation demonstration to date at GLIF 2006. See the GLIF Press Release and the write-ups from Grid Today and Science Grid This Week.

- Nov 2005: The iGrid demo was repeated at SC'05.

- Sep 2005: HARC was used in the successful distributed visualization demo at iGrid 2005.


Contact

Please send all questions and queries on HARC to harc@cct.lsu.edu.